|

|

| Line 1: |

Line 1: |

| − | <div class="title">OX Drive API</div> | + | <div class="title">Running a cluster</div> |

| | | | |

| | __TOC__ | | __TOC__ |

| | | | |

| − | = Introduction = | + | = Concepts = |

| | | | |

| − | The module <code>drive</code> is used to synchronize files and folders between server and client, using a server-centric approach to allow an easy implementation on the client-side.

| + | For inter-OX-communication over the network, multiple Open-Xchange servers can form a cluster. This brings different advantages regarding distribution and caching of volatile data, load balancing, scalability, fail-safety and robustness. Additionally, it provides the infrastructure for upcoming features of the Open-Xchange server. |

| | + | The clustering capabilities of the Open-Xchange server are mainly built up on [http://hazelcast.com Hazelcast], an open source clustering and highly scalable data distribution platform for Java. The following article provides an overview about the current featureset and configuration options. |

| | | | |

| − | The synchronization is based on checksums for files and folders, differences between the server- and client-side are determined using a three-way comparison of server, client and previously acknowledged file- and directory-versions. The synchronization logic is performed by the server, who instructs the client with a set of actions that should be executed in order to come to a synchronized state.

| + | = Requirements on HTTP routing = |

| | | | |

| − | Therefore, the client takes a snapshot of it's local files and directories, calculates their checksums, and sends them as a list to the server, along with a list of previously acknowledged checksums. The server takes a similar snapshot of the files and directories on the underlying file storages and evaluates which further actions are necessary for synchronization. After executing the server-side actions, the client receives a list of actions that should be executed on the client-side. These steps are repeated until the server-state matches the client-state.

| + | An OX cluster is always part of a larger picture. Usually there is front level loadbalancer as central HTTPS entry point to the platform. This loadbalancer optionally performs HTTPS termination and forwards HTTP(S) requests to webservers (the usual and only supported choice as of now is Apache). These webservers are performing HTTPS termination (if this is not happening on the loadbalancer) and serve static content, and (which is what is relevant for our discussion here) they forward dynamic requests to the OX backends. |

| | | | |

| − | Key concept is that the synchronization works stateless, i.e. it can be interrupted and restarted at any time, following the eventual consistency model.

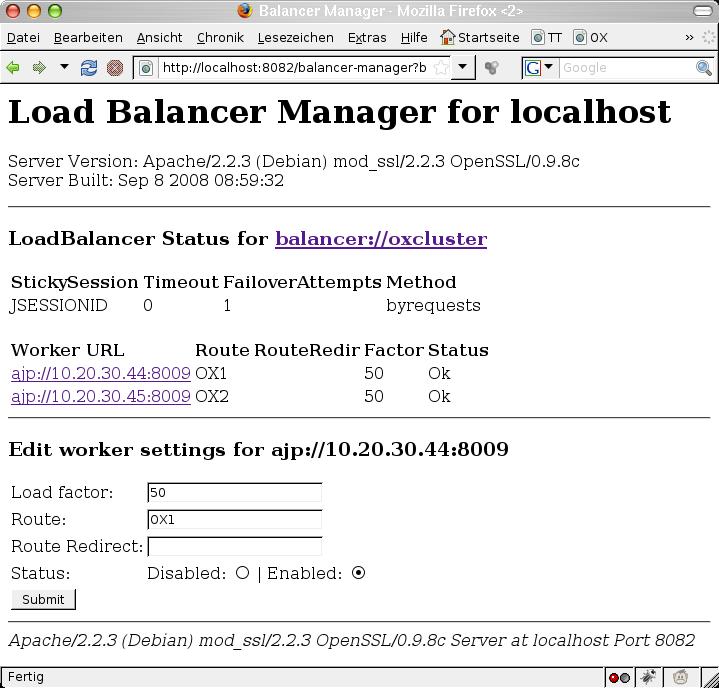

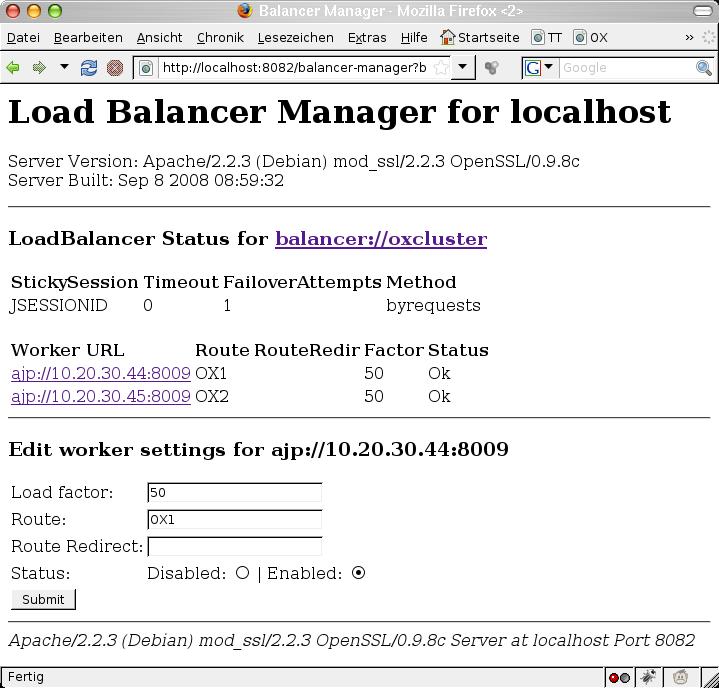

| + | A central requirement for the interaction of these components (loadbalancer, webservers, OX nodes) is that we have session stability based on the JSESSIONID cookie / jsessionid path component suffix. This means that our application sets a cookie named JSESSIONID which has a value like <large decimal number>.<route identifier>, e.g. "5661584529655240315.OX1". The route identifier here ("OX1" in this example) is taken by the OX node from a configuration setting from a config file and is specific to one OX node. HTTP routing must happen such that HTTP requests with a cookie with such a suffix always end up the corresponding OX node. There are furthermore specific cirumstances when passing this information via cookie is not possible. Then the JSESSIONID is transferred in a path component as "jsessionid=..." in the HTTP request. The routing mechanism needs to take that into account also. |

| | | | |

| − | Entry point for the synchronization is the [[#Synchronize_folders|<code>syncfolders</code>]] request, where the directories are compared, and further actions are determined by the server, amongst others actions to synchronize the files in a specific directory using the [[#Synchronize_files_in_a_folder|<code>syncfiles</code>]] request. After executing the actions, the client should send another <code>syncfolders</code> request to the server and execute the returned actions (if present), or finish the synchronization if there are no more actions to execute. In pseudo-code, the synchronization routine could be implemented as follows:

| + | There are mainly two options to implement this. If the Apache processes are running co-located on the same machines running the OX groupware processes, it is often desired to have the front level loadbalancer perform HTTP routing to the correct machines. If dedicated Apache nodes are employed, is is usually sufficient to have the front-level loadbalancer do HTTP routing to the Apache nodes in a round-robin fashion and perform routing to the correct OX nodes in the Apache nodes. |

| | | | |

| − | WHILE TRUE

| + | We provide sample configuration files to configure Apache (with mod_proxy_http) to perform HTTP routing correctly in our guides on OXpedia, e.g. [[AppSuite:Main_Page_AppSuite#quickinstall]]. Central elements are the directives "ProxySet stickysession=JSESSIONID|jsessionid scolonpathdelim=On" in conjunction with the "route=OX1" parameters to the BalancerMember lines in the Proxy definition. This is valid for Apache 2.2 as of Sep-2014. |

| − | {

| |

| − | response = SYNCFOLDERS()

| |

| − | IF 0 == response.actions.length

| |

| − | BREAK

| |

| − | ELSE

| |

| − | EXECUTE(response.actions)

| |

| − | }

| |

| | | | |

| − | Basically, it's up to the client how often such a synchronization cycle is initiated. For example, he could start a new synchronization cycle after a fixed interval, if he recognizes that the client directories have changed, or if he is informed that something has changed on the server by an event. It's also up to the client to interrupt the synchronization cycle at any time during execution of the actions and continue later on, however, it's recommended to start a new synchronization cycle each time to avoid possibly outdated actions.

| + | How to configure a front level loadbalancer to perform HTTP equivalent HTTP routing is dependent on the specific loadbalancer implementation. If Apache is used as front level loadbalancer, the same configuration as discussed in the previous section can be employed. As of time of writing this text (Sep 2014), the alternative choices are thin. F5 BigIP is reported to be able to implement "jsessionid based persistence using iRules". nginx has the functionality in their commercial "nginx plus" product. (Both of these options have not been tested by OX.) Other loadbalancers with this functionality are not known to us. |

| | | | |

| − | = API =

| + | If the front level loadbalancer is not capable of performing correct HTTP routing, is is required to configure correct HTTP routing on Apache level, even if Apache runs co-located on the OX nodes and thus cross-routing happens. |

| | | | |

| − | As part of the [[HTTP_API|HTTP API]], the basic conventions for exchanging messages that described there are also valid for this case, especially the [[HTTP_API#Low_level_protocol|low level protocol]] and [[HTTP_API#Error_handling|error handling]]. Each request against the Drive API assumes a valid server session that is uniquely identified by the session id and the corresponding cookies and are sent with each request. A new session can be created via the [[HTTP_API#Module_.22login.22|login module]].

| + | There are several reasons why we require session stability in exactly this way. We require session stabilty for horizontal scale-out; while we support transparent resuming / migration of user sessions in the OX cluster without need for users to re-authenticate, sessions wandering around randomly will consume a fixed amount resources corresponding to a running session on each OX node in the cluster, while a session sticky to one OX node will consume this fixed amount of resources only on one OX node. Furthermore there are mechanisms in OX like TokenLogin which work only of all requests beloning to one sequence get routed to the same OX node even if they stem from different machines with different IPs. Only the JSESSIONID (which in this case is transferred as jsessionid path component, as cookies do not work during a 302 redirect, which is part of this sequence) carries the required information where the request must be routed to. |

| | | | |

| − | The root folder plays another important role for the message exchange. The root folder has a unique identifier. It is the parent server folder for the synchronization. All path details for directories and files are relative to this folder. This folder's id is sent with each request. To select the root folder during initial client configuration, the client may get a list of synchronizable folders with the [[#Get_synchronizable_Folders|<code>subfolders</code>]] action.

| + | Usual "routing based on cookie hash" is not sufficient here since it disregards the information which machine originally issued the cookie. It only ensures that the session will be sticky to any target, which statistically will not be the same machine that issued the cookie. OX will then set a new JSESSIONID cookie, assuming the session had been migrated. The loadbalancer will then route the session to a different target, as the hash of the cookie will differ. This procedure then happens iteratively until by chance the routing based on cookie hash will route the session to the correct target. By then, a lot of resources will have been wasted, by creating full (short-term) sessions on all OX nodes. Furthermore, processes like TokenLogin will not work this way. |

| | | | |

| − | Subsequently all transferred objects and all possible actions are listed.

| + | = Configuration = |

| | | | |

| − | == File Version ==

| + | All settings regarding cluster setup are located in the configuration file ''hazelcast.properties''. The former used additional files ''cluster.properties'', ''mdns.properties'' and ''static-cluster-discovery.properties'' are no longer needed. The following gives an overview about the most important settings - please refer to the inline documentation of the configuration file for more advanced options. |

| | | | |

| − | A file in a directory is uniquely identified by its filename and the checksum of its content.

| + | Note: The configuration guide targets v7.4.0 of the OX server (and above). For older versions, please consult the history of this page. |

| | | | |

| − | {| id="FileVersion" cellspacing="0" border="1"

| + | == General == |

| − | |+ align="bottom" | File Version

| |

| − | ! Name !! Type !! Value

| |

| − | |-

| |

| − | | name || String || The name of the file, including its extension, e.g. <code>test.doc</code>.

| |

| − | |-

| |

| − | | checksum || String || The MD5 hash of the file, expressed as a lowercase hexadecimal number string, 32 characters long, e.g. <code>f8cacac95379527cd4fa15f0cb782a09</code>.

| |

| − | |}

| |

| | | | |

| − | == Directory Version ==

| + | To restrict access to the cluster and to separate the cluster from others in the local network, a name and password needs to be defined. Only backend nodes having the same values for those properties are able to join and form a cluster. |

| | | | |

| − | A directory is uniquely identified by its full path, relative to the root folder, and the checksum of its content.

| + | # Configures the name of the cluster. Only nodes using the same group name |

| − | | + | # will join each other and form the cluster. Required if |

| − | {| id="DirectoryVersion" cellspacing="0" border="1"

| + | # "com.openexchange.hazelcast.network.join" is not "empty" (see below). |

| − | |+ align="bottom" | Directory Version

| + | com.openexchange.hazelcast.group.name= |

| − | ! Name !! Type !! Value

| |

| − | |-

| |

| − | | path || String || The path of the directory, including the directory's name, relative to the root folder, e.g. <code>/sub/test/letters</code>.

| |

| − | |-

| |

| − | | checksum || String || The MD5 hash of the directory, expressed as a lowercase hexadecimal number string, 32 characters long, e.g. <code>f8cacac95379527cd4fa15f0cb782a09</code>.

| |

| − | |}

| |

| − | | |

| − | Note: the checksum of a directory is calculated based on its contents in the following algorithm:

| |

| − | | |

| − | * Build a list containing each file in the directory (not including subfolders or files in subfolders)

| |

| − | * Ensure a lexicographically order in the following way:

| |

| − | ** Normalize the filename using the <code>NFC</code> normalization form (canonical decomposition, followed by canonical composition) - see http://www.unicode.org/reports/tr15/tr15-23.html for details

| |

| − | ** Encode the filename to an array of UTF-8 unsigned bytes (array of codepoints)

| |

| − | ** Compare the filename (encoded as byte array "fn1") to another one "fn2" using the following comparator algorithm:

| |

| − | | |

| − | min_length = MIN(LENGTH(fn1), LENGTH(fn2))

| |

| − | FOR i = 0; i < min_length; i++

| |

| − | {

| |

| − | result = fn1[i] - fn2[i]

| |

| − | IF 0 != result RETURN result

| |

| − | }

| |

| − | RETURN LENGTH(fn1) - LENGTH(fn2)

| |

| − | | |

| − | * Calculate the aggregated MD5 checksum for the directory based on each file in the ordered list:

| |

| − | ** Append the file's NFC-normalized (see above) name, encoded as UTF-8 bytes

| |

| − | ** Append the file's MD5 checksum string, encoded as UTF-8 bytes

| |

| − | | |

| − | == Actions ==

| |

| − | | |

| − | All actions are encoded in the following format. Depending on the action type, not all properties may be present.

| |

| − | | |

| − | {| id="Actions" cellspacing="0" border="1"

| |

| − | |+ align="bottom" | Actions

| |

| − | ! Name !! Type !! Value

| |

| − | |-

| |

| − | | action || String || The type of action to execute, currently one of <code>acknowledge</code>, <code>edit</code>, <code>download</code>, <code>upload</code>, <code>remove</code>, <code>sync</code>, <code>error</code>.

| |

| − | |-

| |

| − | | version || Object || The (original) file- or directory-version referenced by the action.

| |

| − | |-

| |

| − | | newVersion || Object || The (new) file- or directory-version referenced by the action.

| |

| − | |-

| |

| − | | path || String || The path to the synchronized folder, relative to the root folder.

| |

| − | |-

| |

| − | | offset || Number || The requested start offset in bytes for file uploads.

| |

| − | |-

| |

| − | | totalLength || Number || The total length in bytes for file downloads.

| |

| − | |-

| |

| − | | contentType || String || The file's content type for downloads.

| |

| − | |-

| |

| − | | created || Timestamp || The file's creation time (always UTC, not translated into user time).

| |

| − | |-

| |

| − | | modified || Timestamp || The file's last modification time (always UTC, not translated into user time).

| |

| − | |-

| |

| − | | error || Object || The error object in case of synchronization errors.

| |

| − | |-

| |

| − | | quarantine || Boolean || The flag to indicate whether versions need to be excluded from synchronization.

| |

| − | |-

| |

| − | | reset || Boolean || The flag to indicate whether locally stored checksums should be invalidated.

| |

| − | |-

| |

| − | | stop || Boolean || The flag to signal that the client should stop the current synchronizsation cycle.

| |

| − | |-

| |

| − | | acknowledge || Boolean || The flag to signal if the client should not update it's stored checksums when performing an <code>EDIT</code> action.

| |

| − | |-

| |

| − | | thumbnailLink || String || A direct link to a small thumbnail image of the file if available (deprecated, available until API version 2).

| |

| − | |-

| |

| − | | previewLink || String || A direct link to a medium-sized preview image of the file if available (deprecated, available until API version 2).

| |

| − | |-

| |

| − | | directLink || String || A direct link to the detail view of the file in the web interface (deprecated, available until API version 2).

| |

| − | |-

| |

| − | | directLinkFragments || String || The fragments part of the direct link (deprecated, available until API version 2).

| |

| − | |}

| |

| − | | |

| − | The following list gives an overview about the used action types:

| |

| − | | |

| − | === <code>acknowledge</code> ===

| |

| − | Acknowledges the successful synchronization of a file- or directory version, i.e., the client should treat the version as synchronized by updating the corresponding entry in its metadata store and including this updated information in all following <code>originalVersions</code> arrays of the <code>syncfiles</code> / <code>syncfolders</code> actions. Depending on the <code>version</code> and <code>newVersion</code> parameters of the action, the following acknowledge operations should be executed (exemplarily for directory versions, file versions are acknowledged in the same way):

| |

| − | | |

| − | * Example 1: Acknowledge a first time synchronized directory <br /> The server sends an <code>acknowledge</code> action where the newly synchronized directory version is encoded in the <code>newVersion</code> parameter. The client should store the version in his local checksum store and send this version in the <code>originalVersions</code> array in upcoming <code>syncfolders</code> requests.

| |

| − | {

| |

| − | "action" : "acknowledge",

| |

| − | "newVersion" : {

| |

| − | "path" : "/",

| |

| − | "checksum" : "d41d8cd98f00b204e9800998ecf8427e"

| |

| − | }

| |

| − | }

| |

| − | | |

| − | * Example 2: Acknowledge a synchronized directory after updates <br /> The server sends an <code>acknowledge</code> action where the previous directory version is encoded in the <code>version</code>, and the newly synchronized directory in the <code>newVersion</code> parameter. The client should replace any previously stored entries of the directory version in his local checksum store with the updated version, and send this version in the <code>originalVersions</code> array in upcoming <code>syncfolders</code> requests.

| |

| − | {

| |

| − | "action" : "acknowledge",

| |

| − | "newVersion" : {

| |

| − | "path" : "/",

| |

| − | "checksum" : "7bb1f1a550e9b9ab4be8a12246f9d5fb"

| |

| − | },

| |

| − | "version" : {

| |

| − | "path" : "/",

| |

| − | "checksum" : "d41d8cd98f00b204e9800998ecf8427e"

| |

| − | }

| |

| − | }

| |

| − | | |

| − | * Example 3: Acknowledge the deletion of a previously synchronized directory <br /> The server sends an <code>acknowledge</code> where the <code>newVersion</code> parameter is set to <code>null</code> to acknowledge the deletion of the previously synchronized directory version as found in the <code>version</code> parameter. The client should remove any stored entries for this directory from his local checksum store, and no longer send this version in the <code>originalVersions</code> array in upcoming <code>syncfolders</code> requests. <br /> Note that an acknowledged deletion of a directory implicitly acknowledges the deletion of all contained files and subfolders, too, so the client should also remove those <code>originalVersion</code>s from his local checksum store.

| |

| − | {

| |

| − | "action" : "acknowledge",

| |

| − | "version" : {

| |

| − | "path" : "/test",

| |

| − | "checksum" : "3525d6f28eb8cb30eb61ab7932367c35"

| |

| − | }

| |

| − | }

| |

| − | | |

| − | === <code>edit</code> ===

| |

| − | Instructs the client to edit a file- or directory version. This is used for move/rename operations. The <code>version</code> parameter is set to the version as sent in the <code>clientVersions</code> array of the preceding <code>syncfiles</code>/</code>syncfolders</code> action. The <code>newVersion</code> contains the new name/path the client should use. Unless the optional boolean parameter <code>acknowledge</code> is set to <code>false</code> an <code>edit</code> action implies that the client updates its known versions store accordingly, i.e. removes the previous entry for <code>version</code> and adds a new entry for <code>newVersion</code>.

| |

| − | When editing a directory version, the client should implicitly take care to create any not exisiting subdirectories in the <code>path</code> of the <code>newVersion</code> parameter.

| |

| − | A concurrent client-side modification of the file/directory version can be detected by the client by comparing the current checksum against the one in the passed <code>newVersion</code> parameter.

| |

| − | | |

| − | * Example 1: Rename a file <br /> The server sends an <code>edit</code> action where the source file is encoded in the <code>version</code>, and the target file in the <code>newVersion</code> parameter. The client should rename the file identified by the <code>version</code> parameter to the name found in the <code>newVersion</code> parameter. Doing so, the stored checksum entry for the file in <code>version</code> should be updated, too, to reflect the changes.

| |

| − | { | |

| − | "path" : "/",

| |

| − | "action" : "edit",

| |

| − | "newVersion" : {

| |

| − | "name" : "test_1.txt",

| |

| − | "checksum" : "03395a94b57eef069d248d90a9410650"

| |

| − | },

| |

| − | "version" : {

| |

| − | "name" : "test.txt",

| |

| − | "checksum" : "03395a94b57eef069d248d90a9410650"

| |

| − | }

| |

| − | }

| |

| − | | |

| − | * Example 2: Move a directory <br /> The server sends an <code>edit</code> action where the source directory is encoded in the <code>version</code>, and the target directory in the <code>newVersion</code> parameter. The client should move the directory identified by the <code>version</code> parameter to the path found in the <code>newVersion</code> parameter. Doing so, the stored checksum entry for the directory in <code>version</code> should be updated, too, to reflect the changes.

| |

| − | {

| |

| − | "action" : "edit",

| |

| − | "newVersion" : {

| |

| − | "path" : "/test2",

| |

| − | "checksum" : "3addd6de801f4a8650c5e089769bdb62"

| |

| − | },

| |

| − | "version" : {

| |

| − | "path" : "/test1/test2",

| |

| − | "checksum" : "3addd6de801f4a8650c5e089769bdb62"

| |

| − | }

| |

| − | }

| |

| − | | |

| − | * Example 3: Rename a conflicting file <br /> The server sends an <code>edit</code> action where the original client file is encoded in the <code>version</code>, and the target filename in the <code>newVersion</code> parameter. The client should rename the file identified by the <code>version</code> parameter to the new filename found in the <code>newVersion</code> parameter. If the <code>acknowledge</code> parameter is set to <code>true</code> or is not set, the stored checksum entry for the file in <code>version</code> should be updated, too, to reflect the changes, otherwise, as in this example, no changes should be done to the stored checksums.

| |

| − | {

| |

| − | "action" : "edit",

| |

| − | "version" : {

| |

| − | "checksum" : "fade32203220752f1fa0e168889cf289",

| |

| − | "name" : "test.txt"

| |

| − | },

| |

| − | "newVersion" : {

| |

| − | "checksum" : "fade32203220752f1fa0e168889cf289",

| |

| − | "name" : "test (TestDrive).txt"

| |

| − | },

| |

| − | "acknowledge" : false,

| |

| − | "path" : "/"

| |

| − | }

| |

| − | | |

| − | === <code>download</code> ===

| |

| − | Contains information about a file version the client should download. For updates of existing files, the previous client version is supplied in the <code>version</code> parameter. For new files, the <code>version</code> parameter is omitted. The <code>newVersion</code> holds the target file version, i.e. filename and checksum, and should be used for the following <code>download</code> request. The <code>totalLength</code> parameter is set to the file size in bytes, allowing the client to recognize when a download is finished. Given the supplied checksum, the client may decide on its own if the target file needs to be downloaded from the server, or can be created by copying a file with the same checksum to the target location, e.g. from a trash folder. The file's content type can be retrieved from the <code>contentType</code> parameter, similar to the file's creation and modification times that are availble in the <code>created</code> and <code>modified</code> parameters.

| |

| − | | |

| − | * Example 1: Download a new file <br /> The server sends a <code>download</code> action where the file version to download is encoded in the <code>newVersion</code> paramter. The client should download and save the file as indicated by the <code>name</code> property of the <code>newVersion</code> in the directory identified by the supplied <code>path</code>. After downloading, the <code>newVersion</code> should be added to the client's known file versions database.

| |

| − | { | |

| − | "totalLength" : 536453,

| |

| − | "path" : "/",

| |

| − | "action" : "download",

| |

| − | "newVersion" : {

| |

| − | "name" : "test.pdf",

| |

| − | "checksum" : "3e0d7541b37d332c42a9c3adbe34aca2"

| |

| − | },

| |

| − | "contentType" : "application/pdf",

| |

| − | "created" : 1375276738232,

| |

| − | "modified" : 1375343720985

| |

| − | }

| |

| − | | |

| − | * Example 2: Download an updated file <br /> The server sends a <code>download</code> action where the previous file version is encoded in the <code>version</code>, and the file version to download in the <code>newVersion</code> parameter. The client should download and save the file as indicated by the <code>name</code> property of the <code>newVersion</code> in the directory identified by the supplied <code>path</code>, replacing the previous file. After downloading, the <code>newVersion</code> should be added to the client's known file versions database, replacing an existing entry for the previous <code>version</code>.

| |

| − | {

| |

| − | "totalLength" : 1599431,

| |

| − | "path" : "/",

| |

| − | "action" : "download",

| |

| − | "newVersion" : {

| |

| − | "name" : "test.pdf",

| |

| − | "checksum" : "bb198790904f5a1785d7402b0d8c390e"

| |

| − | },

| |

| − | "contentType" : "application/pdf",

| |

| − | "version" : {

| |

| − | "name" : "test.pdf",

| |

| − | "checksum" : "3e0d7541b37d332c42a9c3adbe34aca2"

| |

| − | },

| |

| − | "created" : 1375276738232,

| |

| − | "modified" : 1375343720985

| |

| − | }

| |

| − | | |

| − | === <code>upload</code> ===

| |

| − | Instructs the client to upload a file to the server. For updates of existing files, the previous server version is supplied in the <code>version</code> parameter, and should be used for the following <code>upload</code> request. For new files, the <code>version</code> parameter is omitted. The <code>newVersion</code> holds the target file version, i.e. filename and checksum, and should be used for the following <code>upload</code> request. When resuming a previously partly completed upload, the <code>offset</code> parameter contains the offset in bytes from which the file version should be uploaded by the client. If possible, the client should set the <code>contentType</code> parameter for the uploaded file, otherwise, the content type falls back to <code>application/octet-stream</code>.

| |

| − | | |

| − | === <code>remove</code> ===

| |

| − | Instructs the client to delete a file or directory version. The <code>version</code> parameter contains the version to delete. A deletion also implies a removal of the corresponding entry in the client's known versions store.

| |

| − | A concurrent client-side modification of the file/directory version can be detected by comparing the current checksum against the one in the passed <code>version</code> parameter.

| |

| − | | |

| − | * Example 1: Remove a file <br /> The server sends a <code>remove</code> action where the file to be removed is encoded as <code>version</code> parameter. The <code>newVersion</code> parameter is not set in the action. The client should delete the file identified by the <code>version</code> parameter. A stored checksum entry for the file in <code>version</code> should be removed, too, to reflect the changes. The <code>newVersion</code> parameter is not set in the action.

| |

| − | {

| |

| − | "path" : "/test2",

| |

| − | "action" : "remove",

| |

| − | "version" : {

| |

| − | "name" : "test.txt",

| |

| − | "checksum" : "03395a94b57eef069d248d90a9410650"

| |

| − | }

| |

| − | }

| |

| − | | |

| − | * Example 2: Remove a directory <br /> The server sends a <code>remove</code> action where the directory to be removed is encoded as <code>version</code> parameter. The <code>newVersion</code> parameter is not set in the action. The client should delete the directory identified by the <code>version</code> parameter. A stored checksum entry for the directory in <code>version</code> should be removed, too, to reflect the changes.

| |

| − | {

| |

| − | "action" : "remove",

| |

| − | "version" : {

| |

| − | "path" : "/test1",

| |

| − | "checksum" : "d41d8cd98f00b204e9800998ecf8427e"

| |

| − | }

| |

| − | }

| |

| − | | |

| − | === <code>sync</code> ===

| |

| − | The client should trigger a synchronization of the files in the directory supplied in the <code>version</code> parameter using the <code>syncfiles</code> request. A <code>sync</code> action implies the client-side creation of the referenced directory if it not yet exists, in case of a new directory on the server. \\

| |

| − | If the <code>version</code> parameter is not specified, a synchronization of all folders using the <code>syncfolders</code> request should be initiated by the client. \\

| |

| − | If the <code>reset</code> flag in the <code>SYNC</code> action is set to <code>true</code>, the client should reset his local state before synchronizing the files in the directory. This may happen when the server detects a synchronization cycle, or believes something else is going wrong. Reset means that the client should invalidate any stored original checksums for the directory itself and any contained files, so that they get re-calculated upon the next synchronization. If the <code>reset</code> flag is set in a <code>SYNC</code> action without a apecific directory version, the client should invalidate any stored checksums, so that all file- and directory-versions get re-calculated during the following synchronizations.

| |

| − | | |

| − | * Example 1: Synchronize folder <br /> The server sends a <code>sync</code> action with a <code>version</code>. The client should trigger a <code>syncfiles</code> request for the specified folder.

| |

| − | {

| |

| − | "action": "sync",

| |

| − | "version": {

| |

| − | "path": "<folder>",

| |

| − | "checksum": "<md5>"

| |

| − | }

| |

| − | }

| |

| − | | |

| − | * Example 2: Synchronize all folders <br /> The server sends a <code>sync</code> action without <code>version</code> (or version is //null//). The client should trigger a <code>syncfolder</code> request, i.e. the client should synchronize all folders.

| |

| − | {

| |

| − | "action": "sync",

| |

| − | "version": null

| |

| − | }

| |

| − | | |

| − | === <code>error</code> ===

| |

| − | With the <code>error</code> action, file- or directory versions causing a synchronization problem can be identified. The root cause of the error is encoded in the <code>error</code> parameter as described at the [[HTTP_API#Error_handling|HTTP API]].

| |

| − | | |

| − | Basically, there are two scenarios where either the errorneous version affects the synchronization state or not. For example, a file that was deleted at the client without sufficient permissions on the server can just be downloaded again by the client, and afterwards, client and server are in-sync again. On the other hand, e.g. when creating a new file at the client and this file can't be uploaded to the server due to missing permissions, the client is out of sync as long as the file is present. Therefore, the boolean parameter <code>quarantine</code> instructs the client whether the file or directory version must be excluded from the synchronization or not. If it is set to <code>true</code>, the client should exclude the version from the <code>clientVersions</code> array, and indicate the issue to the enduser. However, if the synchronization itself is not affected and the <code>quarantine</code> flag is set to <code>false</code>, the client may still indicate the issue once to the user in the background, e.g. as a balloontip notification.

| |

| − | | |

| − | The client may reset it's quarantined versions on it's own, e.g. if the user decides to "try again", or automatically after a configurable interval.

| |

| − | | |

| − | The server may also decide that further synchronization should be suspended, e.g. in case of repeated synchronization problems. Such a situation is indicated with the parameter <code>stop</code> set to <code>true</code>. In this case, the client should at least cancel the current synchronization cycle. If appropriate, the client should also be put into a 'paused' mode, and the user should be informed accordingly.

| |

| − | | |

| − | There may also be situations where a error or warning is sent to the client, independently of a file- or directory version, e.g. when the client version is outdated and a newer version is available for download.

| |

| − | | |

| − | The most common examples for errors are insufficient permissions or exceeded quota restrictions, see examples below.

| |

| − | | |

| − | * Example 1: Create a file in a read-only folder <br /> The server sends an <code>error</code> action where the errorneous file is encoded in the <code>newVersion</code> parameter and the <code>quarantine</code> flag is set to <code>true</code>. The client should exclude the version from the <code>clientVersions</code> array in upcoming <code>syncFiles</code> requests so that it doesn't affect the synchronization algorithm. The error message and further details are encoded in the <code>error</code> object of the action.

| |

| − | {

| |

| − | "error" : {

| |

| − | "category" : 3,

| |

| − | "error_params" : ["/test"],

| |

| − | "error" : "You are not allowed to create files at \"/test\"",

| |

| − | "error_id" : "1358320776-69",

| |

| − | "categories" : "PERMISSION_DENIED",

| |

| − | "code" : "DRV-0012"

| |

| − | },

| |

| − | "path" : "/test",

| |

| − | "quarantine" : true,

| |

| − | "action" : "error",

| |

| − | "newVersion" : {

| |

| − | "name" : "test.txt",

| |

| − | "checksum" : "3f978a5a54cef77fa3a4d3fe9a7047d2"

| |

| − | }

| |

| − | }

| |

| − | | |

| − | * Example 2: Delete a file without sufficient permissions <br /> Besides a new <code>download</code> action to restore the locally deleted file again, the server sends an <code>error</code> action where the errorneous file is encoded in the <code>version</code> parameter and the <code>quarantine</code> flag is set to <code>false</code>. Further synchronizations are not affected, but the client may still inform the user about the rejected operation. The error message and further details are encoded in the <code>error</code> object of the action.

| |

| − | {

| |

| − | "error" : {

| |

| − | "category" : 3,

| |

| − | "error_params" : ["test.png", "/test"],

| |

| − | "error" : "You are not allowed to delete the file \"test.png\" at \"/test\"",

| |

| − | "error_id" : "1358320776-74",

| |

| − | "categories" : "PERMISSION_DENIED",

| |

| − | "code" : "DRV-0011"

| |

| − | },

| |

| − | "path" : "/test",

| |

| − | "quarantine" : false,

| |

| − | "action" : "error",

| |

| − | "newVersion" : {

| |

| − | "name" : "test.png",

| |

| − | "checksum" : "438f06398ce968afdbb7f4db425aff09"

| |

| − | }

| |

| − | }

| |

| − | | |

| − | * Example 3: Upload a file that exceeds the quota <br /> The server sends an <code>error</code> action where the errorneous file is encoded in the <code>newVersion</code> parameter and the <code>quarantine</code> flag is set to <code>true</code>. The client should exclude the version from the <code>clientVersions</code> array in upcoming <code>syncFiles</code> requests so that it doesn't affect the synchronization algorithm. The error message and further details are encoded in the <code>error</code> object of the action.

| |

| − | {

| |

| − | "error" : {

| |

| − | "category" : 3,

| |

| − | "error_params" : [],

| |

| − | "error" : "The allowed Quota is reached",

| |

| − | "error_id" : "-485491844-918",

| |

| − | "categories" : "PERMISSION_DENIED",

| |

| − | "code" : "DRV-0016"

| |

| − | },

| |

| − | "path" : "/",

| |

| − | "quarantine" : true,

| |

| − | "action" : "error",

| |

| − | "newVersion" : {

| |

| − | "name" : "test.txt",

| |

| − | "checksum" : "0ca6033e2a9c2bea1586a2984bf111e6"

| |

| − | }

| |

| − | }

| |

| − | | |

| − | * Example 4: Synchronize with a client where the version is no longer supported. <br /> The server sends an <code>error</code> action with code <code>DRV-0028</code> and an appropriate error message. The <code>stop</code> flag is set to <code>true</code> to interrupt the synchronization cycle.

| |

| − | {

| |

| − | "stop" : true,

| |

| − | "error" : {

| |

| − | "category" : 13,

| |

| − | "error_params" : [],

| |

| − | "error" : "The client application you're using is outdated and no longer supported - please upgrade to a newer version.",

| |

| − | "error_id" : "103394512-13",

| |

| − | "categories" : "WARNING",

| |

| − | "code" : "DRV-0028",

| |

| − | "error_desc" : "Client outdated - current: \"0.9.2\", required: \"0.9.10\""

| |

| − | },

| |

| − | "quarantine" : false,

| |

| − | "action" : "error"

| |

| − | }

| |

| − | | |

| − | * Example 5: Synchronize with a client where a new version of the client application is available. <br /> The server sends an <code>error</code> action with code <code>DRV-0029</code> and an appropriate error message. The <code>stop</code> flag is set to <code>false</code> to indicate that the synchronization can continue.

| |

| − | {

| |

| − | "stop" : false,

| |

| − | "error" : {

| |

| − | "category" : 13,

| |

| − | "error_params" : [],

| |

| − | "error" : "A newer version of your client application is available for download.",

| |

| − | "error_id" : "103394512-29",

| |

| − | "categories" : "WARNING",

| |

| − | "code" : "DRV-0029",

| |

| − | "error_desc" : "Client update available - current: \"0.9.10\", available: \"0.9.12\""

| |

| − | },

| |

| − | "quarantine" : false,

| |

| − | "action" : "error"

| |

| − | }

| |

| − |

| |

| − | == Synchronize folders ==

| |

| − | | |

| − | This request performs the synchronization of all folders, resulting in different actions that should be executed on the client afterwards. This operation typically serves as an entry point for a synchronization cycle.

| |

| − | | |

| − | PUT <code>/ajax/drive?action=syncfolders</code>

| |

| − | | |

| − | Parameters:

| |

| − | * <code>session</code> - A session ID previously obtained from the login module.

| |

| − | * <code>root</code> - The ID of the referenced root folder on the server.

| |

| − | * <code>version</code> - The current client version (matching the pattern <code>^[0-9]+(\\.[0-9]+)*$</code>). If not set, the initial version <code>0</code> is assumed.

| |

| − | * <code>apiVersion</code> - The API version that the client is using. If not set, the initial version <code>0</code> is assumed.

| |

| − | * <code>diagnostics</code> (optional) - If set to <code>true</code>, an additional diagnostics trace is supplied in the response.

| |

| − | * <code>pushToken</code> (optional) - The client's push registration token to associate it to generated events.

| |

| − | | |

| − | Request Body: <br />

| |

| − | A JSON object containing two JSON arrays named <code>clientVersions</code> and <code>originalVersions</code>. The client versions array lists all current directories below the root directory as a flat list, encoded as [[#Directory_Version|Directory Versions]]. The original versions array contains all previously known directories, i.e. all previously synchronized and acknowledged directories, also encoded as [[#Directory_Version|Directory Versions]]. \\

| |

| − | Optionally, available since API version 2, the JSON object may also contain two arrays named <code>fileExclusions</code> and <code>directoryExclusions</code> to define client-side exclusion filters, with each element encoded as [[#File_pattern|File patterns]] and [[#Directory_pattern|Directory patterns]] accordingly. See [[#Client_side_filtering]] for details.

| |

| − | | |

| − | Response: <br />

| |

| − | A JSON array containing all actions the client should execute for synchronization. Each array element is an action as described in [[#Actions | Actions]]. <br /> If the <code>diagnostics</code> flag was set (either to <code>true</code> or <code>false</code>), this array is wrapped into an additional JSON object in the <code>actions</code> parameter, and the diagnostics trace is provided at <code>diagnostics</code>.

| |

| − | | |

| − | Example:

| |

| − | ==> PUT http://192.168.32.191/ajax/drive?action=syncfolders&root=56&session=5d0c1e8eb0964a3095438b450ff6810f

| |

| − | > Content:

| |

| − | {

| |

| − | "clientVersions" : [{

| |

| − | "path" : "/",

| |

| − | "checksum" : "7b744b13df4b41006495e1a15327368a"

| |

| − | }, {

| |

| − | "path" : "/test1",

| |

| − | "checksum" : "3ecc97334d7f6bf2b795988092b8137e"

| |

| − | }, {

| |

| − | "path" : "/test2",

| |

| − | "checksum" : "56534fc2ddcb3b7310d3ef889bc5ae18"

| |

| − | }, {

| |

| − | "path" : "/test2/test3",

| |

| − | "checksum" : "c193fae995d9f9431986dcdc3621cd98"

| |

| − | }

| |

| − | ],

| |

| − | "originalVersions" : [{

| |

| − | "path" : "/",

| |

| − | "checksum" : "7b744b13df4b41006495e1a15327368a"

| |

| − | }, {

| |

| − | "path" : "/test2/test3",

| |

| − | "checksum" : "c193fae995d9f9431986dcdc3621cd98"

| |

| − | }, {

| |

| − | "path" : "/test2",

| |

| − | "checksum" : "35d1b51fdefbee5bf81d7ae8167719b8"

| |

| − | }, {

| |

| − | "path" : "/test1",

| |

| − | "checksum" : "3ecc97334d7f6bf2b795988092b8137e"

| |

| − | }

| |

| − | ]

| |

| − | }

| |

| − |

| |

| − | <== HTTP 200 OK (8.0004 ms elapsed, 102 bytes received)

| |

| − | < Content:

| |

| − | {

| |

| − | "data" : [{

| |

| − | "action" : "sync",

| |

| − | "version" : {

| |

| − | "path" : "/test2",

| |

| − | "checksum" : "56534fc2ddcb3b7310d3ef889bc5ae18"

| |

| − | }

| |

| − | }

| |

| − | ]

| |

| − | }

| |

| − | | |

| − | Example 2:

| |

| − | ==> PUT http://192.168.32.191/ajax/drive?action=syncfolders&root=56&session=5d0c1e8eb0964a3095438b450ff6810f

| |

| − | > Content:

| |

| − | {

| |

| − | "clientVersions" : [{

| |

| − | "path" : "/",

| |

| − | "checksum" : "7b744b13df4b41006495e1a15327368a"

| |

| − | }, {

| |

| − | "path" : "/test1",

| |

| − | "checksum" : "3ecc97334d7f6bf2b795988092b8137e"

| |

| − | }, {

| |

| − | "path" : "/test2",

| |

| − | "checksum" : "56534fc2ddcb3b7310d3ef889bc5ae18"

| |

| − | }, {

| |

| − | "path" : "/test2/test3",

| |

| − | "checksum" : "c193fae995d9f9431986dcdc3621cd98"

| |

| − | }

| |

| − | ],

| |

| − | "originalVersions" : [{

| |

| − | "path" : "/",

| |

| − | "checksum" : "7b744b13df4b41006495e1a15327368a"

| |

| − | }, {

| |

| − | "path" : "/test2/test3",

| |

| − | "checksum" : "c193fae995d9f9431986dcdc3621cd98"

| |

| − | }, {

| |

| − | "path" : "/test2",

| |

| − | "checksum" : "35d1b51fdefbee5bf81d7ae8167719b8"

| |

| − | }, {

| |

| − | "path" : "/test1",

| |

| − | "checksum" : "3ecc97334d7f6bf2b795988092b8137e"

| |

| − | }

| |

| − | ]

| |

| − | "fileExclusions" : [{

| |

| − | "path" : "/",

| |

| − | "name" : "excluded.txt",

| |

| − | "type" : "exact"

| |

| − | }

| |

| − | ], "directoryExclusions" : [{

| |

| − | "path" : "/temp",

| |

| − | "type" : "exact"

| |

| − | }, {

| |

| − | "path" : "/temp/*",

| |

| − | "type" : "glob"

| |

| − | }

| |

| − | ]

| |

| − | }

| |

| − |

| |

| − | <== HTTP 200 OK (8.0004 ms elapsed, 102 bytes received)

| |

| − | < Content: | |

| − | {

| |

| − | "data" : [{

| |

| − | "action" : "sync",

| |

| − | "version" : {

| |

| − | "path" : "/test2",

| |

| − | "checksum" : "56534fc2ddcb3b7310d3ef889bc5ae18"

| |

| − | }

| |

| − | }

| |

| − | ]

| |

| − | }

| |

| − | | |

| − | | |

| − | == Synchronize files in a folder ==

| |

| − | | |

| − | This request performs the synchronization of a single folder, resulting in different actions that should be executed on the client afterwards. This action is typically executed as result of a <code>syncfolders</code> action.

| |

| − | | |

| − | PUT <code>/ajax/drive?action=syncfiles</code>

| |

| − | | |

| − | Parameters:

| |

| − | * <code>session</code> - A session ID previously obtained from the login module.

| |

| − | * <code>root</code> - The ID of the referenced root folder on the server.

| |

| − | * <code>path</code> - The path to the synchronized folder, relative to the root folder.

| |

| − | * <code>device</code> (optional) - A friendly name identifying the client device from a user's point of view, e.g. "My Tablet PC".

| |

| − | * <code>apiVersion</code> - The API version that the client is using. If not set, the initial version <code>0</code> is assumed.

| |

| − | * <code>diagnostics</code> (optional) - If set to <code>true</code>, an additional diagnostics trace is supplied in the response.

| |

| − | * <code>columns</code> (optional) - A comma-separated list of columns representing additional metadata that is relevant for the client. Each column is specified by a numeric column identifier. Column identifiers for file metadata are defined in [[#File Metadata]]. If available, the requested metadata of files is included in the corresponsing <code>DOWNLOAD</code> and <code>ACKNOWLEDGE</code> actions (deprecated, available until API version 2).

| |

| − | * <code>pushToken</code> (optional) - The client's push registration token to associate it to generated events.

| |

| − | | |

| − | Request Body: <br />

| |

| − | A JSON object containing two JSON arrays named <code>clientVersions</code> and <code>originalVersions</code>. The client versions array lists all current files in the client directory, encoded as [[#File Version | File Versions]]. The original versions array contains all previously known files, i.e. all previously synchronized and acknowledged files, also encoded as [[#File Version | File Versions]]. \\

| |

| − | Optionally, available since API version 2, the JSON object may also contain an array named <code>fileExclusions</code> to define client-side exclusion filters, with each element encoded as [[#File pattern | File patterns]]. See [[#Client side filtering]] for details.

| |

| − | | |

| − | Response: <br />

| |

| − | A JSON array containing all actions the client should execute for synchronization. Each array element is an action as described in [[#Actions | Actions]]. <br /> If the <code>diagnostics</code> flag was set (either to <code>true</code> or <code>false</code>), this array is wrapped into an additional JSON object in the <code>actions</code> parameter, and the diagnostics trace is provided at <code>diagnostics</code>.

| |

| − | | |

| − | Example:

| |

| − | ==> PUT http://192.168.32.191/ajax/drive?action=syncfiles&root=56&path=/test2&device=Laptop&session=5d0c1e8eb0964a3095438b450ff6810f

| |

| − | > Content:

| |

| − | {

| |

| − | "clientVersions" : [{

| |

| − | "name" : "Jellyfish.jpg",

| |

| − | "checksum" : "5a44c7ba5bbe4ec867233d67e4806848"

| |

| − | }, {

| |

| − | "name" : "Penguins.jpg",

| |

| − | "checksum" : "9d377b10ce778c4938b3c7e2c63a229a"

| |

| − | }

| |

| − | ],

| |

| − | "originalVersions" : [{

| |

| − | "name" : "Jellyfish.jpg",

| |

| − | "checksum" : "5a44c7ba5bbe4ec867233d67e4806848"

| |

| − | }

| |

| − | ]

| |

| − | }

| |

| | | | |

| − | <== HTTP 200 OK (6.0004 ms elapsed, 140 bytes received) | + | # The password used when joining the cluster. Defaults to "wtV6$VQk8#+3ds!a". |

| − | < Content:

| + | # Please change this value, and ensure it's equal on all nodes in the cluster. |

| − | {

| + | com.openexchange.hazelcast.group.password=wtV6$VQk8#+3ds!a |

| − | "data" : [{

| |

| − | "path" : "/test2",

| |

| − | "action" : "upload",

| |

| − | "newVersion" : {

| |

| − | "name" : "Penguins.jpg",

| |

| − | "checksum" : "9d377b10ce778c4938b3c7e2c63a229a"

| |

| − | },

| |

| − | "offset" : 0

| |

| − | }

| |

| − | ]

| |

| − | }

| |

| | | | |

| − | Example 2:

| + | == Network == |

| − | ==> PUT http://192.168.32.191/ajax/drive?action=syncfiles&root=56&path=/test2&device=Laptop&session=5d0c1e8eb0964a3095438b450ff6810f

| |

| − | > Content:

| |

| − | {

| |

| − | "clientVersions" : [{

| |

| − | "name" : "Jellyfish.jpg",

| |

| − | "checksum" : "5a44c7ba5bbe4ec867233d67e4806848"

| |

| − | }, {

| |

| − | "name" : "Penguins.jpg",

| |

| − | "checksum" : "9d377b10ce778c4938b3c7e2c63a229a"

| |

| − | }

| |

| − | ],

| |

| − | "originalVersions" : [{

| |

| − | "name" : "Jellyfish.jpg",

| |

| − | "checksum" : "5a44c7ba5bbe4ec867233d67e4806848"

| |

| − | }

| |

| − | ]

| |

| − | "fileExclusions" : [{

| |

| − | "path" : "*",

| |

| − | "name" : "*.tmp",

| |

| − | "type" : "glob"

| |

| − | }

| |

| − | ]

| |

| − | }

| |

| − |

| |

| − | <== HTTP 200 OK (6.0004 ms elapsed, 140 bytes received)

| |

| − | < Content:

| |

| − | {

| |

| − | "data" : [{

| |

| − | "path" : "/test2",

| |

| − | "action" : "upload",

| |

| − | "newVersion" : {

| |

| − | "name" : "Penguins.jpg",

| |

| − | "checksum" : "9d377b10ce778c4938b3c7e2c63a229a"

| |

| − | },

| |

| − | "offset" : 0

| |

| − | }

| |

| − | ]

| |

| − | }

| |

| | | | |

| − | == Download a file ==

| + | It's required to define the network interface that is used for cluster communication via ''com.openexchange.hazelcast.network.interfaces''. By default, the interface is restricted to the local loopback address only. To allow the same configuration amongst all nodes in the cluster, it's recommended to define the value using a wildcard matching the IP addresses of all nodes participating in the cluster, e.g. ''192.168.0.*'' |

| | | | |

| − | Downloads a file from the server.

| + | # Comma-separated list of interface addresses hazelcast should use. Wildcards |

| | + | # (*) and ranges (-) can be used. Leave blank to listen on all interfaces |

| | + | # Especially in server environments with multiple network interfaces, it's |

| | + | # recommended to specify the IP-address of the network interface to bind to |

| | + | # explicitly. Defaults to "127.0.0.1" (local loopback only), needs to be |

| | + | # adjusted when building a cluster of multiple backend nodes. |

| | + | com.openexchange.hazelcast.network.interfaces=127.0.0.1 |

| | | | |

| − | GET <code>/ajax/drive?action=download</code>

| + | To form a cluster of multiple OX server nodes, different discovery mechanisms can be used. The discovery mechanism is specified via the property ''com.openexchange.hazelcast.network.join'': |

| | | | |

| − | or | + | # Specifies which mechanism is used to discover other backend nodes in the |

| | + | # cluster. Possible values are "empty" (no discovery for single-node setups), |

| | + | # "static" (fixed set of cluster member nodes) or "multicast" (automatic |

| | + | # discovery of other nodes via multicast). Defaults to "empty". Depending on |

| | + | # the specified value, further configuration might be needed, see "Networking" |

| | + | # section below. |

| | + | com.openexchange.hazelcast.network.join=empty |

| | | | |

| − | PUT <code>/ajax/drive?action=download</code>

| + | Generally, it's advised to use the same network join mechanism for all nodes in the cluster, and, in most cases, it's strongly recommended to use a ''static'' network join configuration. This will allow the nodes to join the cluster directly upon startup. With a ''multicast'' based setup, nodes will merge to an existing cluster possibly at some later time, thus not being able to access the distributed data until they've joined. |

| | | | |

| − | Parameters:

| + | Depending on the network join setting, further configuration may be necessary, as decribed in the following paragraphs. |

| − | * <code>session</code> - A session ID previously obtained from the login module.

| |

| − | * <code>root</code> - The ID of the referenced root folder on the server.

| |

| − | * <code>path</code> - The path to the synchronized folder, relative to the root folder.

| |

| − | * <code>name</code> - The name of the file version to download.

| |

| − | * <code>checksum</code> - The checksum of the file version to download.

| |

| − | * <code>apiVersion</code> - The API version that the client is using. If not set, the initial version <code>0</code> is assumed.

| |

| − | * <code>offset</code> (optional) - The start offset in bytes for the download. If not defined, an offset of <code>0</code> is assumed.

| |

| − | * <code>length</code> (optional) - The number of bytes to include in the download stream. If not defined, the file is read until the end.

| |

| | | | |

| − | Request Body: <br />

| + | === empty === |

| − | Optionally, available since API version 3, if client-side file- and/or directory exclusion filters are active, a PUT request can be used. The request body then holds a JSON object containing two arrays named <code>fileExclusions</code> and <code>directoryExclusions</code> to define client-side exclusion filters, with each element encoded as [[File_pattern|File patterns]] and [[Directory_pattern|Directory patterns]] accordingly. See [[Client_side_filtering|Client side filtering]] for details.

| |

| | | | |

| − | Response: <br />

| + | When using the default value ''empty'', no other nodes are discovered in the cluster. This value is suitable for single-node installations. Note that other nodes that are configured to use other network join mechanisms may be still able to still to connect to this node, e.g. using a ''static'' network join, having the IP address of this host in the list of potential cluster members (see below). |

| − | The binary content of the requested file version. Note that in case of errors, an exception is not encoded in the default JSON error format here. Instead, an appropriate HTTP error with a status code != 200 is returned. For example, in case of the requested file being deleted or modified in the meantime, a response with HTTP status code 404 (not found) is sent.

| |

| | | | |

| − | Example:

| + | === static === |

| − | ==> GET http://192.168.32.191/ajax/drive?action=download&root=56&path=/test2&name=Jellyfish.jpg&checksum=5a44c7ba5bbe4ec867233d67e4806848&offset=0&length=-1&session=5d0c1e8eb0964a3095438b450ff6810f

| |

| − |

| |

| − | <== HTTP 200 OK (20.0011 ms elapsed, 775702 bytes received)

| |

| | | | |

| − | == Upload a file ==

| + | The most common setting for ''com.openexchange.hazelcast.network.join'' is ''static''. A static cluster discovery uses a fixed list of IP addresses of the nodes in the cluster. During startup and after a specific interval, the underlying Hazelcast library probes for not yet joined nodes from this list and adds them to the cluster automatically. The address list is configured via ''com.openexchange.hazelcast.network.join.static.nodes'': |

| | | | |

| − | Uploads a file to the server.

| + | # Configures a comma-separated list of IP addresses / hostnames of possible |

| | + | # nodes in the cluster, e.g. "10.20.30.12, 10.20.30.13:5701, 192.178.168.110". |

| | + | # Only used if "com.openexchange.hazelcast.network.join" is set to "static". |

| | + | # It doesn't hurt if the address of the local host appears in the list, so |

| | + | # that it's still possible to use the same list throughout all nodes in the |

| | + | # cluster. |

| | + | com.openexchange.hazelcast.network.join.static.nodes= |

| | | | |

| − | PUT <code>/ajax/drive?action=upload</code>

| + | For a fixed set of backend nodes, it's recommended to simply include the IP addresses of all nodes in the list, and use the same configuration for each node. However, it's only required to add the address of at least one other node in the cluster to allow the node to join the cluster. Also, when adding a new node to the cluster and this list is extended accordingly, existing nodes don't need to be shut down to recognize the new node, as long as the new node's address list contains at least one of the already running nodes. |

| | | | |

| − | Parameters:

| + | === multicast === |

| − | * <code>session</code> - A session ID previously obtained from the login module.

| |

| − | * <code>root</code> - The ID of the referenced root folder on the server.

| |

| − | * <code>path</code> - The path to the synchronized folder, relative to the root folder.

| |

| − | * <code>newName</code> - The target name of the file version to upload.

| |

| − | * <code>newChecksum</code> - The target checksum of the file version to upload.

| |

| − | * <code>name</code> (optional) - The previous name of the file version being uploaded. Only set when uploading an updated version of an existing file to the server.

| |

| − | * <code>checksum</code> - The previous checksum of the file version to upload. Only set when uploading an updated version of an existing file to the server.

| |

| − | * <code>apiVersion</code> - The API version that the client is using. If not set, the initial version <code>0</code> is assumed.

| |

| − | * <code>contentType</code> (optional) - The content type of the file. If not defined, <code>application/octet-stream</code> is assumed.

| |

| − | * <code>offset</code> (optional) - The start offset in bytes for the upload when resuming a previous partial upload. If not defined, an offset of <code>0</code> is assumed.

| |

| − | * <code>totalLength</code> (optional) - The total expected length of the file (required to support resume of uploads). If not defined, the upload is assumed completed after the operation.

| |

| − | * <code>created</code> (optional) - The creation time of the file as timestamp.

| |

| − | * <code>modified</code> (optional) - The last modification time of the file as timestamp. Defaults to the current server time if no value or a value larger than the current time is supplied.

| |

| − | * <code>binary</code> - Expected to be set to <code>true</code> to indicate the binary content.

| |

| − | * <code>device</code> (optional) - A friendly name identifying the client device from a user's point of view, e.g. "My Tablet PC".

| |

| − | * <code>diagnostics</code> (optional) - If set to <code>true</code>, an additional diagnostics trace is supplied in the response.

| |

| − | * <code>pushToken</code> (optional) - The client's push registration token to associate it to generated events.

| |

| | | | |

| − | Request body: <br />

| + | For highly dynamic setups where nodes are added and removed from the cluster quite often and/or the host's IP addresses are not fixed, it's also possible to configure the network join via multicast. During startup and after a specific interval, the backend nodes initiate the multicast join process automatically, and discovered nodes form or join the cluster afterwards. The multicast group and port can be configured as follows: |

| − | The binary content of the uploaded file version.

| |

| | | | |

| − | Response: <br />

| + | # Configures the multicast address used to discover other nodes in the cluster |

| − | A JSON array containing all actions the client should execute for synchronization. Each array element is an action as described in [[#Actions | Actions]]. <br /> If the <code>diagnostics</code> flag was set (either to <code>true</code> or <code>false</code>), this array is wrapped into an additional JSON object in the <code>actions</code> parameter, and the diagnostics trace is provided at <code>diagnostics</code>.

| + | # dynamically. Only used if "com.openexchange.hazelcast.network.join" is set |

| − | | + | # to "multicast". If the nodes reside in different subnets, please ensure that |

| − | Example:

| + | # multicast is enabled between the subnets. Defaults to "224.2.2.3". |

| − | ==> PUT http://192.168.32.191/ajax/drive?action=upload&root=56&path=/test2&newName=Penguins.jpg&newChecksum=9d377b10ce778c4938b3c7e2c63a229a&contentType=image/jpeg&offset=0&totalLength=777835&binary=true&device=Laptop&created=1375343426999&modified=1375343427001&session=5d0c1e8eb0964a3095438b450ff6810f | + | com.openexchange.hazelcast.network.join.multicast.group=224.2.2.3 |

| − | > Content:

| |

| − | [application/octet-stream;, 777835 bytes]

| |

| | | | |

| − | <== HTTP 200 OK (108.0062 ms elapsed, 118 bytes received) | + | # Configures the multicast port used to discover other nodes in the cluster |

| − | < Content: | + | # dynamically. Only used if "com.openexchange.hazelcast.network.join" is set |

| − | {

| + | # to "multicast". Defaults to "54327". |

| − | "data" : [{

| + | com.openexchange.hazelcast.network.join.multicast.port=54327 |

| − | "action" : "acknowledge",

| |

| − | "newVersion" : {

| |

| − | "name" : "Penguins.jpg",

| |

| − | "checksum" : "9d377b10ce778c4938b3c7e2c63a229a"

| |

| − | }

| |

| − | }

| |

| − | ]

| |

| − | }

| |

| − | | |

| − | == Listen for changes (long polling) ==

| |

| − | | |

| − | Listens for server-side changes. The request blocks until new actions for the client are available, or the specified waiting time elapses. May return immediately if previously received but not yet processed actions are available for this client.

| |

| − | | |

| − | GET <code>/ajax/drive?action=listen</code>

| |

| | | | |

| − | Parameters:

| + | == Example == |

| − | * <code>session</code> - A session ID previously obtained from the login module.

| |

| − | * <code>root</code> - The ID of the referenced root folder on the server.

| |

| − | * <code>timeout</code> (optional) - The maximum timeout in milliseconds to wait.

| |

| − | * <code>pushToken</code> (optional) - The client's push registration token to associate it to generated events.

| |

| | | | |

| − | Response: <br />

| + | The following example shows how a simple cluster named ''MyCluster'' consisting of 4 backend nodes can be configured using ''static'' cluster discovery. The node's IP addresses are 10.0.0.15, 10.0.0.16, 10.0.0.17 and 10.0.0.18. Note that the same ''hazelcast.properties'' is used by all nodes. |

| − | A JSON array containing all actions the client should execute for synchronization. Each array element is an action as described in [[#Actions | Actions]]. If there no changes were detected, an empty array is returned. Typically, the client will continue with the next <code>listen</code> request after the response was processed.

| |

| | | | |

| − | Example:

| + | com.openexchange.hazelcast.group.name=MyCluster |

| − | ==> GET http://192.168.32.191/ajax/drive?action=listen&root=65841&session=51378e29f82042b4afe4af1c034c6d68 | + | com.openexchange.hazelcast.group.password=secret |

| − | | + | com.openexchange.hazelcast.network.join=static |

| − | <== HTTP 200 OK (63409.6268 ms elapsed, 28 bytes received) | + | com.openexchange.hazelcast.network.join.static.nodes=10.0.0.15,10.0.0.16,10.0.0.17,10.0.0.18 |

| − | < Content: | + | com.openexchange.hazelcast.network.interfaces=10.0.0.* |

| − | {

| |

| − | "data" : [{

| |

| − | "action" : "sync",

| |

| − | }

| |

| − | ]

| |

| − | }

| |

| | | | |

| − | == Get quota ==

| |

| | | | |

| − | Gets the quota limits and current usage for the storage the supplied root folder belongs to. Depending on the filestore configuration, this may include both restrictions on the number of allowed files and the total size of all contained files in bytes. If there's no limit, -1 is returned.

| + | == Advanced Configuration == |

| | | | |

| − | GET <code>/ajax/drive?action=quota</code>

| + | === Custom Partitioning (preliminary) === |

| | | | |

| − | Parameters:

| + | While originally being desgined to separate the nodes holding distributed data into different risk groups for increased fail safety, a custom partioning strategy may also be used to distinguish between nodes holding distributed data from those who should not. |

| − | * <code>session</code> - A session ID previously obtained from the login module.

| |

| − | * <code>root</code> - The ID of the referenced root folder on the server.

| |

| | | | |

| − | Response: <br />

| + | This approach of custom partitioning may be used in a OX cluster, where usually different backend nodes serve different purposes. A common scenario is that there are nodes handling requests from the web interfaces, and others being responsible for USM/EAS traffic. Due to their nature of processing large chunks of synchronization data in memory, the USM/EAS nodes may encounter small delays when the Java garbage collector kicks in and suspends the Java Virtual Machine. Since those delays may also have an influence on hazelcast-based communication in the cluster, the idea is to instruct hazelcast to not store distributed data on that nodes. This is where a custom partitioning scheme comes into play. |

| − | A JSON object containing the quota restrictions inside a JSON array with the property name <code>quota</code>. The JSON array contains zero, one or two <code>quota</code> objects as described below, depending on the filestore configuration. If one or more quota <code>type</code>s are missing in the array, the client can expect that there are no limitations for that type. Besides the array, the JSON object also contains a hyperlink behind the <code>manageLink</code> parameter, pointing to an URL where the user could manage his quota restrictions. | |

| | | | |

| − | {| id="Quota" cellspacing="0" border="1"

| + | To setup a custom paritioning scheme in the cluster, an additional ''hazelcast.xml'' configuration file is used, which should be placed into the ''hazelcast'' subdirectory of the OX configuration folder, usually at ''/opt/openexchange/etc/hazelcast''. Please note that it's vital that each node in the cluster is configured equally here, so the same ''hazelcast.xml'' file should be copied to each server. The configuration read from there is used as basis for all further settings that are taken from the ordinary ''hazelcast.properties'' config file. |

| − | |+ align="bottom" | Quota

| |

| − | ! Name !! Type !! Value

| |

| − | |-

| |

| − | | limit || Number || The allowed limit (either number of files or sum of filesizes in bytes).

| |

| − | |-

| |

| − | | use || Number || The current usage (again either number of files or sum of filesizes in bytes).

| |

| − | |-

| |

| − | | type || String || The kind of quota restriction, currently either <code>storage</code> (size of contained files in bytes) or <code>file</code> (number of files).

| |

| − | |}

| |

| | | | |

| − | Example:

| + | To setup a custom paritioning scheme, the partition groups must be defined in the ''hazelcast.xml'' file. See the following file for an example configuration, where the three nodes ''10.10.10.60'', ''10.10.10.61'' and ''10.10.10.62'' are defined to form an own paritioning group each. Doing so, all distributed data will be stored at one of those nodes physically, while the corresponding backup data (if configured) at one of the other two nodes. All other nodes in the cluster will not be used to store distributed data, but will still be "full" hazelcast members, which is necessary for other cluster-wide operations the OX backends use. |

| − | ==> GET http://192.168.32.191/ajax/drive?action=quota&root=56&session=35cb8c2d1423480692f0d5053d14ba52

| |

| − |

| |

| − | <== HTTP 200 OK (9.6854 ms elapsed, 113 bytes received)

| |

| − | < Content:

| |

| − | {

| |

| − | "data" : {

| |

| − | "quota" : [{

| |

| − | "limit" : 107374182400,

| |

| − | "use" : 1109974882,

| |

| − | "type" : "storage"

| |

| − | }, {

| |

| − | "limit" : 800000000000,

| |

| − | "use" : 1577,

| |

| − | "type" : "file"

| |

| − | }

| |

| − | ],

| |

| − | "manageLink" : "https://www.example.com/manageQuota"

| |

| − | }

| |

| − | }

| |

| | | | |

| − | == Get Settings ==

| + | Please note that the configured backup count in the map configurations should be smaller than the number of nodes here, otherwise, there may be problems if one of those data nodes is shut down temporarily for maintenance. So, the minimum number of nodes to define in the partition group sections is implicitly bound to the sum of a map's ''backupCount'' and ''asyncBackupCount'' properties, plus ''1'' for the original data partition. |

| | | | |

| − | Gets various settings applicable for the drive clients.

| |

| | | | |

| − | GET <code>/ajax/drive?action=settings</code>

| + | <?xml version="1.0" encoding="UTF-8"?> |

| − | | + | <!-- |

| − | Parameters:

| + | ~ Copyright (c) 2008-2013, Hazelcast, Inc. All Rights Reserved. |

| − | * <code>session</code> - A session ID previously obtained from the login module.

| + | ~ |

| − | * <code>root</code> - The ID of the referenced root folder on the server.

| + | ~ Licensed under the Apache License, Version 2.0 (the "License"); |

| − | * <code>language</code> (optional) - The locale to use for language-sensitive settings (in the format <code><2-letter-language>_<2-letter-region></code>, e.g. <code>de_CH</code> or <code>en_GB</code>). Defaults to the user's configured locale on the server.

| + | ~ you may not use this file except in compliance with the License. |

| − | | + | ~ You may obtain a copy of the License at |

| − | Response:<br />

| + | ~ |

| − | A JSON object holding the settings as described below. This also includes a JSON array with the property name <code>quota</code> that contains zero, one or two quota objects as described below, depending on the filestore configuration. If one or more quota types are missing in the array, the client can expect that there are no limitations for that type.

| + | ~ http://www.apache.org/licenses/LICENSE-2.0 |

| − | | + | ~ |

| − | {| id="Quota" cellspacing="0" border="1"

| + | ~ Unless required by applicable law or agreed to in writing, software |

| − | |+ align="bottom" | Quota

| + | ~ distributed under the License is distributed on an "AS IS" BASIS, |

| − | ! Name !! Type !! Value

| + | ~ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. |

| − | |-

| + | ~ See the License for the specific language governing permissions and |

| − | | limit || Number || The allowed limit (either number of files or sum of filesizes in bytes).

| + | ~ limitations under the License. |

| − | |-

| + | --> |

| − | | use || Number || The current usage (again either number of files or sum of filesizes in bytes).

| |

| − | |-

| |

| − | | type || String || The kind of quota restriction, currently either <code>storage</code> (size of contained files in bytes) or <code>file</code> (number of files).

| |

| − | |}

| |

| − | | |

| − | {| id="Settings" cellspacing="0" border="1"

| |

| − | |+ align="bottom" | Settings

| |

| − | ! Name !! Type !! Value

| |

| − | |-

| |

| − | | helpLink || String || A hyperlink to the online help.

| |

| − | |-

| |

| − | | quotaManageLink || String || A hyperlink to an URL where the user could manage his quota restrictions.

| |

| − | |-

| |

| − | | quota || Array || A JSON array containing the quota restrictions as described above.

| |

| − | |-