AppSuite:Running a cluster: Difference between revisions

Revision as of 11:44, 10 September 2013

Concepts

For inter-OX-communication over the network, multiple Open-Xchange servers can form a cluster. This brings advantages regarding distribution and caching of volatile data, load balancing, scalability, fail-safety and robustness. Additionally, it provides the infrastructure for upcoming features of the Open-Xchange server. The clustering capabilities of the Open-Xchange server are mainly built on Hazelcast (http://hazelcast.com), an open source clustering and highly scalable data distribution platform for Java. The following article provides an overview of the current feature set and configuration options.

Configuration (v7.4.0 and above)

All settings regarding cluster setup are located in the configuration file hazelcast.properties. The formerly used additional files cluster.properties, mdns.properties and static-cluster-discovery.properties are no longer needed. The following gives an overview of the most important settings - please refer to the inline documentation of the configuration file for more advanced options.

General

To restrict access to the cluster and to separate the cluster from others in the local network, a name and a password need to be defined. Only backend nodes having the same values for those properties are able to join and form a cluster.

    # Configures the name of the cluster. Only nodes using the same group name
    # will join each other and form the cluster. Required if
    # "com.openexchange.hazelcast.network.join" is not "empty" (see below).
    com.openexchange.hazelcast.group.name=

    # The password used when joining the cluster. Defaults to "wtV6$VQk8#+3ds!a".
    # Please change this value, and ensure it's equal on all nodes in the cluster.
    com.openexchange.hazelcast.group.password=wtV6$VQk8#+3ds!a

Network

It's required to define the network interface that is used for cluster communication via com.openexchange.hazelcast.network.interfaces. By default, the interface is restricted to the local loopback address only. To allow the same configuration amongst all nodes in the cluster, it's recommended to define the value using a wildcard matching the IP addresses of all nodes participating in the cluster, e.g. 192.168.0.*

    # Comma-separated list of interface addresses hazelcast should use. Wildcards
    # (*) and ranges (-) can be used. Leave blank to listen on all interfaces.
    # Especially in server environments with multiple network interfaces, it's
    # recommended to specify the IP-address of the network interface to bind to
    # explicitly. Defaults to "127.0.0.1" (local loopback only), needs to be
    # adjusted when building a cluster of multiple backend nodes.
    com.openexchange.hazelcast.network.interfaces=127.0.0.1
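As an illustration of the wildcard and range syntax mentioned in the inline documentation, the value could take one of the forms sketched here. All addresses are hypothetical, and the range notation is assumed to follow Hazelcast's interface syntax; please verify it against the inline documentation of the installed version.

```properties
# Hypothetical addresses, for illustration only.
# Wildcard: matches any interface address starting with 192.168.0.
com.openexchange.hazelcast.network.interfaces=192.168.0.*

# Alternative (commented out): a range in the last octet, 192.168.0.10 to 192.168.0.20
#com.openexchange.hazelcast.network.interfaces=192.168.0.10-20

# Alternative (commented out): an explicit comma-separated list
#com.openexchange.hazelcast.network.interfaces=192.168.0.10,192.168.0.11
```

The wildcard form is the one that allows the same file to be used unchanged on all nodes of the cluster.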

To form a cluster of multiple OX server nodes, different discovery mechanisms can be used. The discovery mechanism is specified via the property com.openexchange.hazelcast.network.join:

    # Specifies which mechanism is used to discover other backend nodes in the
    # cluster. Possible values are "empty" (no discovery for single-node setups),
    # "static" (fixed set of cluster member nodes) or "multicast" (automatic
    # discovery of other nodes via multicast). Defaults to "empty". Depending on
    # the specified value, further configuration might be needed, see "Networking"
    # section below.
    com.openexchange.hazelcast.network.join=empty

Generally, it's recommended to use the same network join mechanism for all nodes in the cluster. Depending on this setting, further configuration may be necessary, as described in the following paragraphs.

empty

When using the default value empty, no other nodes are discovered in the cluster. This value is suitable for single-node installations. Note that nodes configured to use other network join mechanisms may still be able to connect to this node, e.g. when they use a static network join and have the IP address of this host in their list of potential cluster members (see below).

static

The most common setting for com.openexchange.hazelcast.network.join is static. A static cluster discovery uses a fixed list of IP addresses of the nodes in the cluster. During startup and after a specific interval, the underlying Hazelcast library probes for not yet joined nodes from this list and adds them to the cluster automatically. The address list is configured via com.openexchange.hazelcast.network.join.static.nodes:

    # Configures a comma-separated list of IP addresses / hostnames of possible
    # nodes in the cluster, e.g. "10.20.30.12, 10.20.30.13:5701, 192.178.168.110".
    # Only used if "com.openexchange.hazelcast.network.join" is set to "static".
    # It doesn't hurt if the address of the local host appears in the list, so
    # that it's still possible to use the same list throughout all nodes in the
    # cluster.
    com.openexchange.hazelcast.network.join.static.nodes=

For a fixed set of backend nodes, it's recommended to simply include the IP addresses of all nodes in the list, and use the same configuration for each node. However, it's only required to add the address of at least one other node in the cluster to allow the node to join the cluster. Also, when adding a new node to the cluster and this list is extended accordingly, existing nodes don't need to be shut down to recognize the new node, as long as the new node's address list contains at least one of the already running nodes.
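A minimal sketch of the last point, assuming a running cluster whose nodes include 10.0.0.15 and a new node with the address 10.0.0.19. The addresses, the cluster name and the password are placeholders chosen for illustration; only the property names are taken from this article.

```properties
# hazelcast.properties on the new node (hypothetical address 10.0.0.19)
com.openexchange.hazelcast.group.name=MyCluster
com.openexchange.hazelcast.group.password=secret
com.openexchange.hazelcast.network.interfaces=10.0.0.*
com.openexchange.hazelcast.network.join=static

# The address of one already running node is enough to join the cluster.
# Listing all nodes remains the recommended setup.
com.openexchange.hazelcast.network.join.static.nodes=10.0.0.15
```

Once the node has joined, the complete address list can be rolled out to all nodes so that every node uses the same configuration again.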

multicast

For highly dynamic setups where nodes are added to and removed from the cluster quite often and/or the hosts' IP addresses are not fixed, it's also possible to configure the network join via multicast. During startup and after a specific interval, the backend nodes initiate the multicast join process automatically, and discovered nodes form or join the cluster afterwards. The multicast group and port can be configured as follows:

    # Configures the multicast address used to discover other nodes in the cluster
    # dynamically. Only used if "com.openexchange.hazelcast.network.join" is set
    # to "multicast". If the nodes reside in different subnets, please ensure that
    # multicast is enabled between the subnets. Defaults to "224.2.2.3".
    com.openexchange.hazelcast.network.join.multicast.group=224.2.2.3

    # Configures the multicast port used to discover other nodes in the cluster
    # dynamically. Only used if "com.openexchange.hazelcast.network.join" is set
    # to "multicast". Defaults to "54327".
    com.openexchange.hazelcast.network.join.multicast.port=54327
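Put together, a multicast-based setup might look like the following sketch. The cluster name, the password and the 10.0.0.* subnet are placeholders; the multicast group and port are the documented defaults and are only listed for completeness.

```properties
# hazelcast.properties, identical on all nodes (placeholder name, password and subnet)
com.openexchange.hazelcast.group.name=MyCluster
com.openexchange.hazelcast.group.password=secret
com.openexchange.hazelcast.network.interfaces=10.0.0.*

# Discover other nodes via multicast instead of a fixed address list
com.openexchange.hazelcast.network.join=multicast

# Documented defaults, stated explicitly
com.openexchange.hazelcast.network.join.multicast.group=224.2.2.3
com.openexchange.hazelcast.network.join.multicast.port=54327
```

No node addresses appear in this file, which is what makes the approach suitable for hosts without fixed IP addresses.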

Example

The following example shows how a simple cluster named MyCluster consisting of 4 backend nodes can be configured using static cluster discovery. The nodes' IP addresses are 10.0.0.15, 10.0.0.16, 10.0.0.17 and 10.0.0.18. Note that the same hazelcast.properties is used by all nodes.

    com.openexchange.hazelcast.group.name=MyCluster
    com.openexchange.hazelcast.group.password=secret
    com.openexchange.hazelcast.network.join=static
    com.openexchange.hazelcast.network.join.static.nodes=10.0.0.15,10.0.0.16,10.0.0.17,10.0.0.18
    com.openexchange.hazelcast.network.interfaces=10.0.0.*

Configuration (prior to v7.4.0)

To form a cluster of multiple OX server nodes, different discovery mechanisms can be used. Currently, a static cluster discovery using a fixed set of IP addresses, and a dynamic cluster discovery based on Zeroconf (mDNS) are available. The installation packages conflict with each other, so that only one of them can be installed at the same time. It's also required to use the same cluster discovery mechanism throughout all nodes in the cluster.

Note: If you change the discovery package on yum-based distributions you need to remove the existing one with rpm -e --nodeps first. yum is not able to replace packages.

Static Cluster Discovery

The package open-xchange-cluster-discovery-static installs the OSGi bundle implementing the OSGi ClusterDiscoveryService. The implementation uses a configuration file that specifies all nodes of the cluster. This cluster discovery module is mutually exclusive with any other cluster discovery module. Only one cluster discovery module can be installed on the backend. When a node is configured to use static cluster discovery, it will try to connect to a pre-defined set of nodes. A comma-separated list of IP addresses of possible nodes is defined in the configuration file static-cluster-discovery.properties, e.g.:

com.openexchange.cluster.discovery.static.nodes=10.20.30.12, 10.20.30.13, 192.178.168.110

For single node installations, the configuration parameter can be left empty. If possible, one should prefer static cluster discovery over the other possibilities, as it allows a new node starting up to directly join an existing cluster. However, probing for the other nodes could lead to a short delay when starting the server.

MDNS Cluster Discovery

The package open-xchange-cluster-discovery-mdns installs the OSGi bundle implementing the OSGi ClusterDiscoveryService. The implementation uses the Zeroconf implementation provided by open-xchange-mdns to find all nodes within the cluster. This cluster discovery module is mutually exclusive with any other cluster discovery module. Only one cluster discovery module can be installed on the backend. MDNS can be enabled or disabled via the mdns.properties configuration file:

com.openexchange.mdns.enabled=true

When enabled, the nodes publish and discover their services using zero-configuration networking in the mDNS multicast group. The services are prefixed with the cluster's name as configured in cluster.properties, meaning that all nodes that should form the cluster must have the same cluster name. When using mDNS cluster discovery, nodes normally start up on their own, as no other nodes in the cluster are known during startup. Doing so, they logically form a cluster on their own. At a later stage, when other nodes have been discovered, nodes merge into a bigger cluster automatically, until finally the whole cluster is formed.

Especially in server environments with multiple network interfaces, it's recommended to specify the IP-address of the network interface to bind to, e.g.:

com.openexchange.mdns.interface=192.178.168.110

It should be configured to the same IP-address as used by Hazelcast, see Hazelcast configuration below. Otherwise, cluster join requests might be targeted to the wrong network interface of the server and the cluster won't form.

Features

The following list gives an overview of the different features that were implemented using the new cluster capabilities.

Distributed Session Storage

Previously, when an Open-Xchange server was shut down for maintenance, all user sessions that were bound to that machine were lost, i.e. the users needed to log in again. With the distributed session storage, all sessions are backed by a distributed map in the cluster, so that they are no longer bound to a specific node in the cluster. When a node is shut down, the session data is still available in the cluster and can be accessed from the remaining nodes. The load-balancing techniques of the webserver then seamlessly route the user session to another node, with no session expired errors. The distributed session storage comes with the package open-xchange-sessionstorage-hazelcast. It's recommended to install this optional package in all clustered environments with multiple groupware server nodes.

Depending on the cluster infrastructure, different backup-count configuration options might be set for the distributed session storage in the map configuration file sessions.properties in the hazelcast subdirectory:

com.openexchange.hazelcast.configuration.map.backupCount=1

The backupCount property configures the number of nodes with synchronized backups. Synchronized backups block operations until backups are successfully copied and acknowledgements are received. If, for example, 1 is set as the backup count, then all entries of the map will be copied to another JVM for fail-safety. 0 means no backup. The value can be any integer between 0 and 6; the default is 1, and setting a value bigger than 6 has no effect.

com.openexchange.hazelcast.configuration.map.asyncBackupCount=0

The asyncBackupCount property configures the number of nodes with async backups. Async backups do not block operations and do not require acknowledgements. 0 means no backup. The value can be any integer between 0 and 6; the default is 0, and setting a value bigger than 6 has no effect.

Since session data is by default backed up continuously by multiple nodes in the cluster, the steps described in Session_Migration to explicitly trigger session migration to other nodes are obsolete and no longer needed with the distributed session storage.

Normally, sessions in the distributed storage are not evicted automatically, but are only removed when they're also removed from the session handler, either due to a logout operation or when exceeding the long-term session lifetime as configured by com.openexchange.sessiond.sessionLongLifeTime in sessiond.properties. Under certain circumstances, i.e. when the session is no longer accessed by the client and the OX node hosting the session in its long-life container is shut down, the remove operation from the distributed storage might not be triggered. Therefore, a maximum idle time of map entries can additionally be configured for the distributed sessions map via

com.openexchange.hazelcast.configuration.map.maxIdleSeconds=640000

To avoid unnecessary eviction, the value should be higher than the configured com.openexchange.sessiond.sessionLongLifeTime in sessiond.properties.
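The session storage settings discussed above can be combined as in the following sketch of sessions.properties. It assumes, purely for the sake of the arithmetic, that com.openexchange.sessiond.sessionLongLifeTime corresponds to one week; please check the actual value in sessiond.properties before choosing the idle time.

```properties
# sessions.properties in the "hazelcast" subdirectory

# One synchronous backup of every session entry on another node (default)
com.openexchange.hazelcast.configuration.map.backupCount=1

# No additional asynchronous backups (default)
com.openexchange.hazelcast.configuration.map.asyncBackupCount=0

# Assumption: the long-term session lifetime is one week,
# i.e. 7 * 24 * 60 * 60 = 604800 seconds.
# 640000 is greater than 604800, so idle entries are only evicted
# after the session handler would have removed the session anyway.
com.openexchange.hazelcast.configuration.map.maxIdleSeconds=640000
```

If the long-term session lifetime is raised, maxIdleSeconds should be raised along with it so that it stays above the lifetime converted to seconds.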

Distributed Indexing Jobs

Groupware data is indexed in the background to yield faster search results. See the article on the Indexing Bundle for more.

Remote Cache Invalidation

For faster access, groupware data is held in different caches by the server. Formerly, the caches utilized the TCP Lateral Auxiliary Cache plug in (LTCP) for the underlying JCS caches to broadcast puts and removals to caches on other OX nodes in the cluster. This could potentially lead to problems when remote invalidation was not working reliably due to network discovery problems. As an alternative, remote cache invalidation can also be performed using reliable publish/subscribe events built up on Hazelcast topics. This can be configured in the cache.properties configuration file, where the 'eventInvalidation' property can either be set to 'false' for the legacy behavior or 'true' for the new mechanism:

com.openexchange.caching.jcs.eventInvalidation=true

All nodes participating in the cluster should be configured equally.

Administration / Troubleshooting

Hazelcast Configuration

The underlying Hazelcast library can be configured using the file hazelcast.properties.

Important:

By default, the property com.openexchange.hazelcast.network.interfaces is set to 127.0.0.1, meaning Hazelcast listens only on the loopback device. To build a cluster among remote nodes, the appropriate network interface needs to be configured there. Leaving that property empty lets Hazelcast listen on all available network interfaces.

The Hazelcast JMX MBean can be enabled or disabled with the property com.openexchange.hazelcast.jmx. The properties com.openexchange.hazelcast.mergeFirstRunDelay and com.openexchange.hazelcast.mergeRunDelay control the run intervals of the so-called Split Brain Handler of Hazelcast that initiates the cluster join process when a new node is started. More details can be found at http://www.hazelcast.com/docs/2.5/manual/single_html/#NetworkPartitioning.

The port ranges used by Hazelcast for incoming and outgoing connections can be controlled via the configuration parameters com.openexchange.hazelcast.networkConfig.port, com.openexchange.hazelcast.networkConfig.portAutoIncrement and com.openexchange.hazelcast.networkConfig.outboundPortDefinitions.

Commandline Tool

To print out statistics about the cluster and the distributed data, the showruntimestats commandline tool can be executed with the clusterstats ('c') argument. This provides an overview of the runtime cluster configuration of the node, other members in the cluster and distributed data structures.

JMX

In the Open-Xchange server Java process, the MBeans com.hazelcast and com.openexchange.hazelcast can be used to monitor and manage different aspects of the underlying Hazelcast cluster. Merely for test purposes, the com.openexchange.hazelcast MBean can be used for manually changing the configured cluster members, i.e. the list of possible OX nodes in the cluster. The com.hazelcast MBean provides detailed information about the cluster configuration and distributed data structures.

Hazelcast Errors

When experiencing Hazelcast-related errors in the logfiles, most likely different versions of the packages are installed, leading to different message formats that can't be understood by nodes using another version. Examples of such errors are exceptions in Hazelcast components regarding (de)serialization or other message processing. This may happen when performing a consecutive update of all nodes in the cluster, where nodes with a heterogeneous setup temporarily try to communicate with each other. If the errors don't disappear after all nodes in the cluster have been updated to the same package versions, it might be necessary to shut down the cluster completely, so that all distributed data is cleared.

Cluster Discovery Errors

- If the started OX nodes don't form a cluster, please double-check your configuration in the files cluster.properties, hazelcast.properties and static-cluster-discovery.properties / mdns.properties (prior to v7.4.0) or hazelcast.properties (v7.4.0 and above)

- It's important to have the same cluster name defined in cluster.properties (prior to v7.4.0) or hazelcast.properties (v7.4.0 and above) throughout all nodes in the cluster

- Especially when using mDNS (prior to v7.4.0) or multicast (v7.4.0 and above) cluster discovery, it might take some time until the cluster is formed

- When using static cluster discovery, at least one other node in the cluster has to be configured in com.openexchange.cluster.discovery.static.nodes (prior to v7.4.0) or com.openexchange.hazelcast.network.join.static.nodes (v7.4.0 and above) to allow joining; however, it's recommended to list all nodes in the cluster here

Disable Cluster Features

The Hazelcast based clustering features can be disabled with the following property changes:

- Disable cluster discovery by either setting com.openexchange.mdns.enabled in mdns.properties to false, or by leaving com.openexchange.cluster.discovery.static.nodes blank in static-cluster-discovery.properties (prior to v7.4.0)

- Disable Hazelcast by setting com.openexchange.hazelcast.enabled to false in hazelcast.properties

- Disable message based cache event invalidation by setting com.openexchange.caching.jcs.eventInvalidation to false in cache.properties
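The property changes listed above can be summarized as follows. Note that the settings live in different files; they are only shown together here for overview, and the entries for versions prior to v7.4.0 are commented out.

```properties
# hazelcast.properties
com.openexchange.hazelcast.enabled=false

# cache.properties
com.openexchange.caching.jcs.eventInvalidation=false

# Prior to v7.4.0 only, mdns.properties (if mDNS cluster discovery is installed):
#com.openexchange.mdns.enabled=false

# Prior to v7.4.0 only, static-cluster-discovery.properties
# (if static cluster discovery is installed; the value is left blank):
#com.openexchange.cluster.discovery.static.nodes=
```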

Update from 6.22.1 to version 6.22.2 and above

As Hazelcast is used by default for the distribution of sessions starting with 6.22.2, you have to adjust the Hazelcast configuration according to your old cache configuration. First of all, it's important that you install the open-xchange-sessionstorage-hazelcast package. This package adds the binding between Hazelcast and the internal session management. Next, you have to set a cluster name in the cluster.properties file (see #Cluster Discovery Errors). Furthermore, you will have to add one of the two discovery modes mentioned in #Cluster Discovery.

Updating a Cluster

Running a cluster means built-in failover on the one hand, but might require some attention when it comes to upgrading the services on all nodes in the cluster. This chapter gives an overview of general concepts and hints for silent updates of the cluster.

Limitations

While in most cases a seamless, rolling upgrade of all nodes in the cluster is possible, there may be situations where nodes running a newer version of the Open-Xchange Server are not able to communicate with older nodes in the cluster, i.e. can't access distributed data or consume incompatible event notifications - especially, when the underlying Hazelcast library is part of the update, which does not support this scenario at the moment. In such cases, the release notes will contain corresponding information, so please have a look there before applying an update.

Additionally, race conditions can always occur during an update, for example client requests that can't be completed successfully or internal events that are not delivered to all nodes in the cluster. The following information should therefore be read as a best-practices guide to minimize the impact of upgrades on the user experience.

Upgrading a single Node

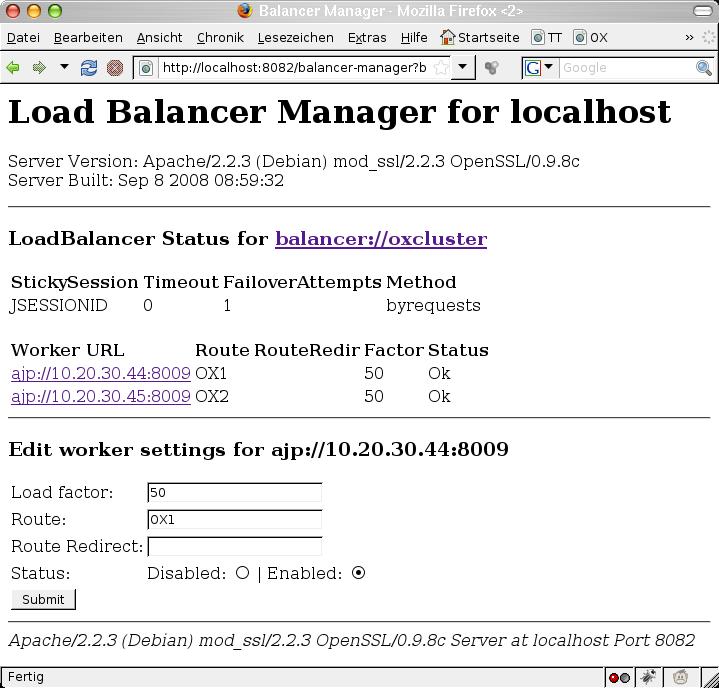

Upgrading all nodes in the cluster should usually be done sequentially, in other words one node after the other. During the upgrade of one node, the node is temporarily disconnected from the other nodes in the cluster, and joins the cluster again after the update is completed. From the backend perspective, this is as easy as stopping the open-xchange service. Other nodes in the cluster will recognize the disconnected node and start to repartition the shared cluster data automatically.

However, the webserver might not immediately notice that the node has been stopped, which results in temporary errors for currently logged in users until they are routed to another machine in the cluster. That's why it's good practice to first tell the webserver's load balancer that the node should no longer receive incoming requests. The Apache Balancer Manager is an excellent tool for this (module mod_status). As the screenshot shows, every node can be put into a disabled mode. Further requests will then be redirected to other nodes in the cluster.

Afterwards, the open-xchange service on the disabled node can be stopped by executing:

$ /etc/init.d/open-xchange stop

or

$ service open-xchange stop

Now the node is effectively in maintenance mode and any updates can take place. The changed cluster infrastructure can be verified by accessing the Hazelcast MBeans either via JMX or with the showruntimestats -c command-line tool (see above for details). The node that was shut down should no longer appear in the 'Member' section (com.hazelcast:type=Member).

When all upgrades are processed, the open-xchange service on the node can be started again by executing:

$ /etc/init.d/open-xchange start

or

$ service open-xchange start

As stated above, depending on the chosen cluster discovery mechanism, it might take some time until the node joins the cluster again. When using static cluster discovery, the node usually joins the existing cluster directly during service startup, in other words before other depending OSGi services are started. Otherwise, there might be situations where the node cannot join the cluster directly, for example when no mDNS advertisements from other nodes in the cluster have been received yet. It can then take some additional time until the node finally joins the cluster. During startup of the node, you can observe the JMX console or the output of showruntimestats -c (com.hazelcast:type=Member) on another node in the cluster to verify when the node has joined.
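Instead of watching the output by hand, the check can be polled from another node. The following is a rough sketch under stated assumptions: `wait_for_member` is a hypothetical helper, and it assumes that each member line printed by showruntimestats -c contains both the string `type=Member` and the address of the node. The exact output format is not specified here, so verify it on your system first. The `STATS_CMD` variable is also hypothetical and only exists to make the command replaceable.

```shell
#!/bin/sh
# wait_for_member ADDRESS [TIMEOUT_SECONDS] [INTERVAL_SECONDS]
# Hypothetical helper: polls the cluster statistics until a member line
# containing ADDRESS shows up, or gives up after TIMEOUT_SECONDS.
# Returns 0 if the member was seen, 1 on timeout.
wait_for_member() {
    member=$1
    timeout=${2:-300}
    interval=${3:-5}
    waited=0
    while [ "$waited" -lt "$timeout" ]; do
        # STATS_CMD defaults to the tool named in the text. The unquoted
        # expansion is intentional so that the command and its option are split.
        if ${STATS_CMD:-showruntimestats -c} 2>/dev/null \
                | grep "type=Member" | grep -q -F "$member"; then
            return 0
        fi
        sleep "$interval"
        waited=$((waited + interval))
    done
    return 1
}

# Example usage on another node of the cluster (the address is a placeholder):
# wait_for_member 10.0.0.2 600 10 && echo "node has joined"
```

The timeout matters mostly for mDNS based discovery, where joining can be delayed as described above.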

After the node has joined, distributed data is repartitioned automatically, and the node is ready to serve incoming requests again, so the node can now be enabled again in the load balancer configuration of the webserver. Afterwards, the next node in the cluster can be upgraded using the same procedure, until all nodes have been processed.
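The backend part of this procedure (stop, upgrade, start) can be wrapped in a small script. This is only a sketch: `upgrade_node` and the `SERVICE_CMD` variable are hypothetical names, the upgrade command itself is whatever your package manager requires and is passed in by the caller, and the load balancer steps before and after are still done manually in the Balancer Manager.

```shell
#!/bin/sh
# upgrade_node UPGRADE_COMMAND
# Hypothetical helper for the node that is being upgraded.
# Before calling it: disable the node in the load balancer.
# After it returns 0: verify that the node has joined the cluster again,
# then enable the node in the load balancer.
upgrade_node() {
    upgrade_cmd=$1
    # SERVICE_CMD is only a hook to replace the "service" command, e.g. for a dry run.
    svc=${SERVICE_CMD:-service}

    # Stop the backend so the other nodes repartition the shared data.
    $svc open-xchange stop || return 1

    # Run the caller-supplied upgrade command. If it fails, the node is
    # deliberately left stopped so that a half-upgraded node does not rejoin.
    sh -c "$upgrade_cmd" || return 1

    # Start the backend again; the node then rejoins the cluster.
    $svc open-xchange start
}

# Dry run that only prints the service calls instead of executing them:
# SERVICE_CMD=echo upgrade_node 'echo "package upgrade would run here"'
```

Leaving the service stopped after a failed upgrade is a design choice of this sketch; the remaining nodes keep serving requests while the problem is investigated.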

Other Considerations

- It's always recommended to only upgrade one node after the other, always ensuring that the cluster has formed correctly between each shutdown/startup of a node.

- During the time of such a rolling upgrade of all nodes, we have effectively heterogeneous software versions in the cluster, which potentially might lead to temporary inconsistencies. Therefore, all nodes in the cluster should be updated in one cycle (but still one after the other).

- Following the above guideline, it's also possible to add nodes to or remove nodes from the cluster dynamically, not only when disconnecting a node temporarily for updates.

- In case of trouble, e.g. a node refuses to join the cluster again after restart, consult the logfiles first for any hints about what is causing the problem, both on the disconnected node and on other nodes in the network.

- If there are general incompatibilities between two revisions of the Open-Xchange Server that prevent operation in a common cluster (see the release notes), it's recommended to choose another cluster name in cluster.properties for the nodes with the new version. This will temporarily lead to two separate clusters during the rolling upgrade, and the old cluster is finally shut down completely after the last node was updated to the new version. While distributed data can't be migrated from one server version to another in this scenario due to the incompatibilities, the uptime of the system itself is not affected, since the nodes in the new cluster are able to serve new incoming requests directly.